Meta's Community Notes: Impact on Content Moderation in Q1 2025

Meta's latest transparency report highlights a 50% reduction in content moderation mistakes in the US, attributing this success to Community Notes. The report covers content removals, government requests, and widely viewed content. While the decrease in errors appears positive, questions remain about potential downsides.

Community Notes Expansion and Concerns

Meta has expanded Community Notes to Reels and Threads replies, even adding a feature to request notes. While user input on content moderation is valuable, concerns exist about the system's effectiveness. A key issue is the limited visibility of notes. Research suggests that many notes are never displayed, potentially hindering their ability to curb misinformation.

A 50% drop in enforcement mistakes may not be entirely positive. It could indicate a decrease in overall enforcement, potentially allowing more harmful content to spread. This is particularly concerning given the scale of Meta's platforms.
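The arithmetic behind that concern can be sketched in a few lines. This is a minimal illustration, assuming mistakes can be modelled as enforcement actions multiplied by an error rate. The function name and every figure below are hypothetical and do not come from Meta's report.

```python
def enforcement_mistakes(actions: int, error_rate: float) -> float:
    """Expected wrongful actions: total actions times the share that are wrong."""
    return actions * error_rate


# Hypothetical figures for illustration only; none come from Meta's report.
baseline = enforcement_mistakes(actions=1_000_000, error_rate=0.10)

# Scenario A: same enforcement volume, but the error rate is halved.
more_accurate = enforcement_mistakes(actions=1_000_000, error_rate=0.05)

# Scenario B: same error rate, but half as many enforcement actions.
less_enforcement = enforcement_mistakes(actions=500_000, error_rate=0.10)

for label, mistakes in [
    ("more accurate", more_accurate),
    ("less enforcement", less_enforcement),
]:
    drop = 1 - mistakes / baseline
    print(f"{label}: {drop:.0%} fewer mistakes")
```

Both scenarios produce the same headline drop in mistakes, so the figure alone cannot show whether moderation became more accurate or simply less active.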

Key Findings from the Q1 2025 Report

The report reveals several notable trends:

- Increased reports of nudity/sexual content on Facebook.

- A rise in content removed under the "dangerous organizations" policy on Instagram, attributed to a bug.

- Increased removal of spam on Instagram.

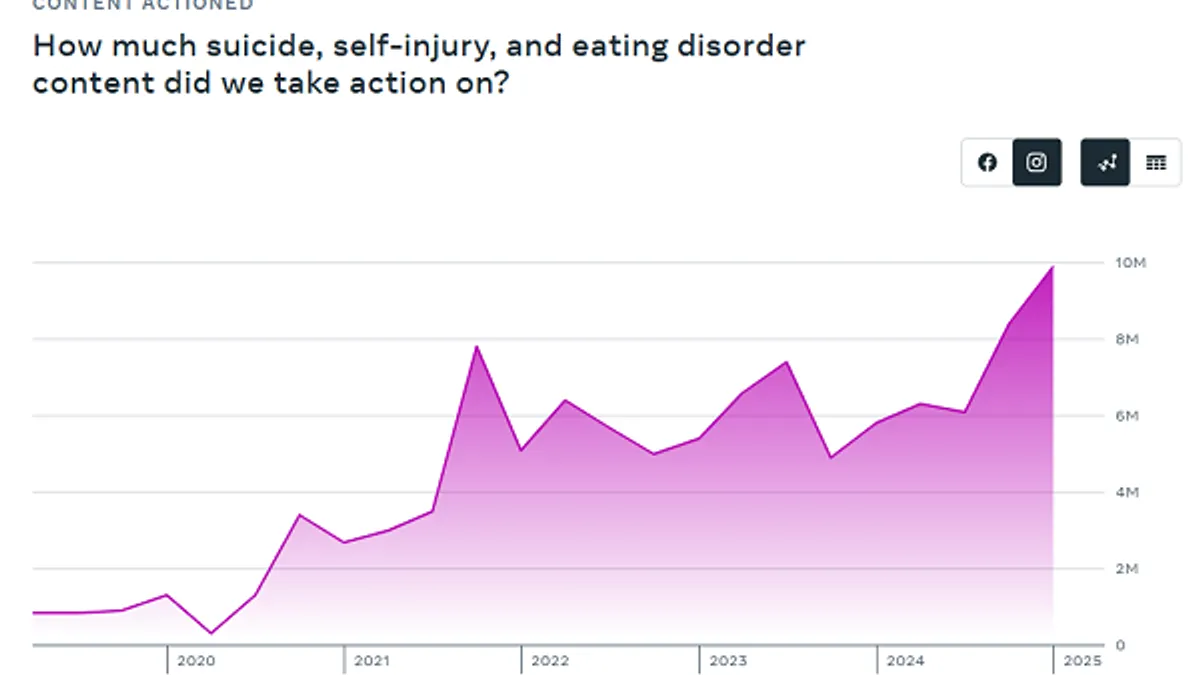

- A concerning rise in suicide, self-injury, and eating disorder content.

- A 12% decline in proactive detection of bullying and harassment.

- A 7% reduction in proactive detection of hate speech.

Meta attributes some of these trends to improved detection technology using large language models (LLMs). However, the decline in proactive detection of harmful content raises concerns about the effectiveness of the new moderation approach.

As Meta puts it: "Early tests suggest that LLMs can perform better than existing machine learning models...we are beginning to see LLMs operating beyond that of human performance for select policy areas."

Government Requests and Fake Accounts

Government information requests remained steady, with India leading the requests. Meta also reports that fake accounts represent approximately 3% of monthly active Facebook users, a decrease from previous estimates.

Widely Viewed Content and External Links

The report shows that 97.3% of post views on Facebook in Q1 2025 went to posts that did not include external links, a slight improvement from the previous quarter. While some publishers report increased referral traffic from Facebook, the overall trend suggests limited external link engagement.

Conclusion: A Mixed Bag

Meta's shift to Community Notes presents a complex picture. While some data points are positive, others raise concerns about potential unintended consequences. The lack of data on missed moderation opportunities makes it difficult to fully assess the impact of this change. Further analysis and transparency are needed to determine the true effectiveness of Meta's new moderation approach.